A Deep Dive On Explainable AI

“Consider a production line in which workers run heavy, potentially dangerous equipment to manufacture steel tubing. Company executives hire a team of machine learning (ML) practitioners to develop an artificial intelligence (AI) model that can assist the frontline workers in making safe decisions, with the hopes that this model will revolutionize their business by improving worker efficiency and safety. After an expensive development process, manufacturers unveil their complex, high-accuracy model to the production line expecting to see their investment pay off. Instead, they see extremely limited adoption by their workers. What went wrong?

This hypothetical example, adapted from a real-world case study in McKinsey’s The State of AI in 2020, demonstrates the crucial role that explainability plays in the world of AI. While the model in the example may have been safe and accurate, the target users did not trust the AI system because they didn’t know how it made decisions. End-users deserve to understand the underlying decision-making processes of the systems they are expected to employ, especially in high-stakes situations. Perhaps unsurprisingly, McKinsey found that improving the explainability of systems led to increased technology adoption…”

— Violet Turri, Assistant Software Developer at the SEI AI Division (Carnegie Mellon University)

RESEARCH

The Role of Explainable AI in the Context of the EU AI Act

The proposed EU AI Act has ignited debates on the necessity of explainable AI (XAI) in high-risk AI systems. While some believe that black-box models must be replaced with transparent alternatives, others argue that XAI techniques can help achieve regulatory compliance. This research examines the Act's transparency and human oversight requirements and how explainability fits into them.

The Role of Explainable AI in the Research Field of AI Ethics

A systematic mapping study (SMS) examines the intersection of XAI and AI ethics, revealing that the field remains largely theoretical. While there is considerable research on technical and design aspects, there is a gap in practical implementation. The study highlights the need for a stronger understanding of how business leaders and practitioners perceive XAI, which could bridge the divide between research and real-world applications.

TOOLS

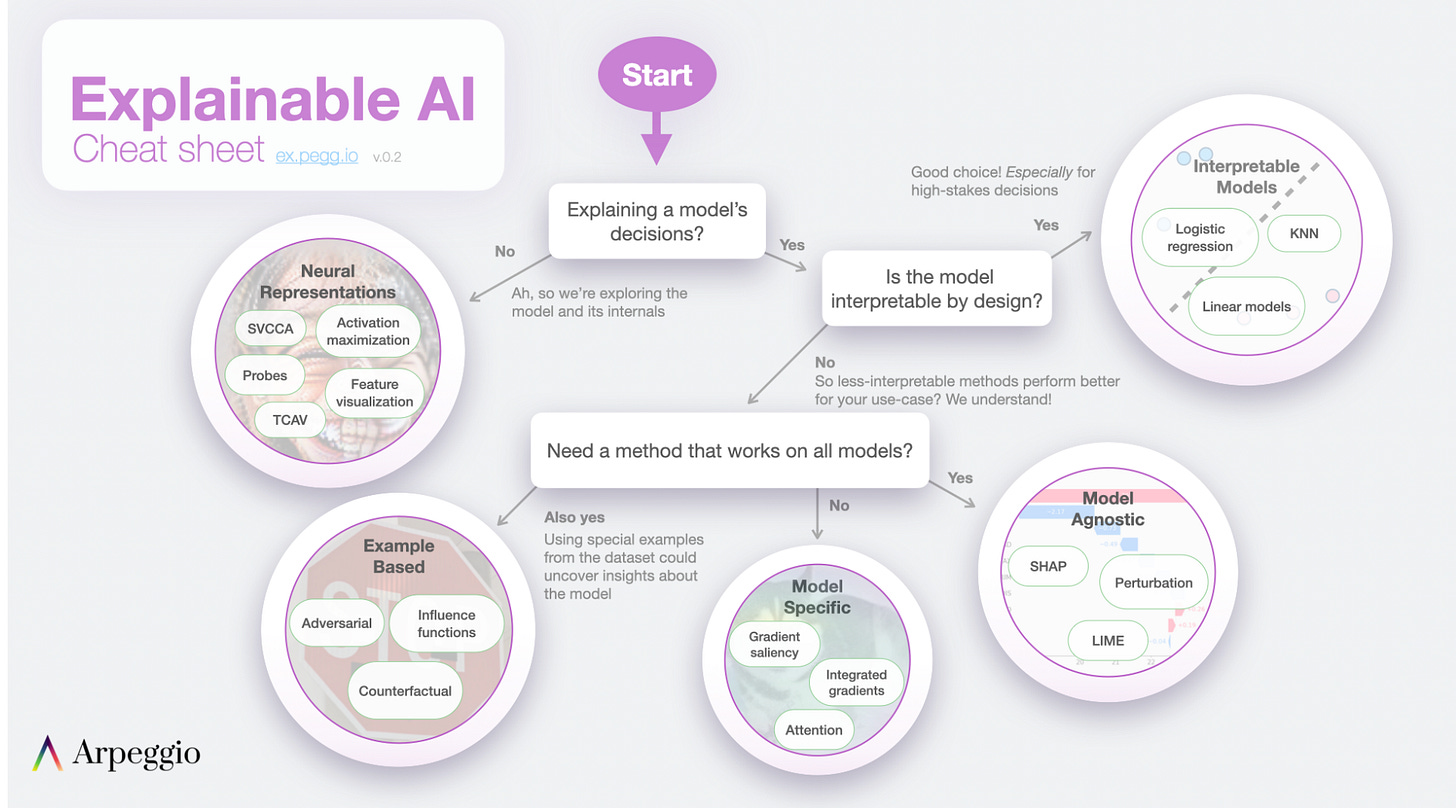

Cheat Sheets

A high-level guide to tools and methods for interpreting AI/ML models:

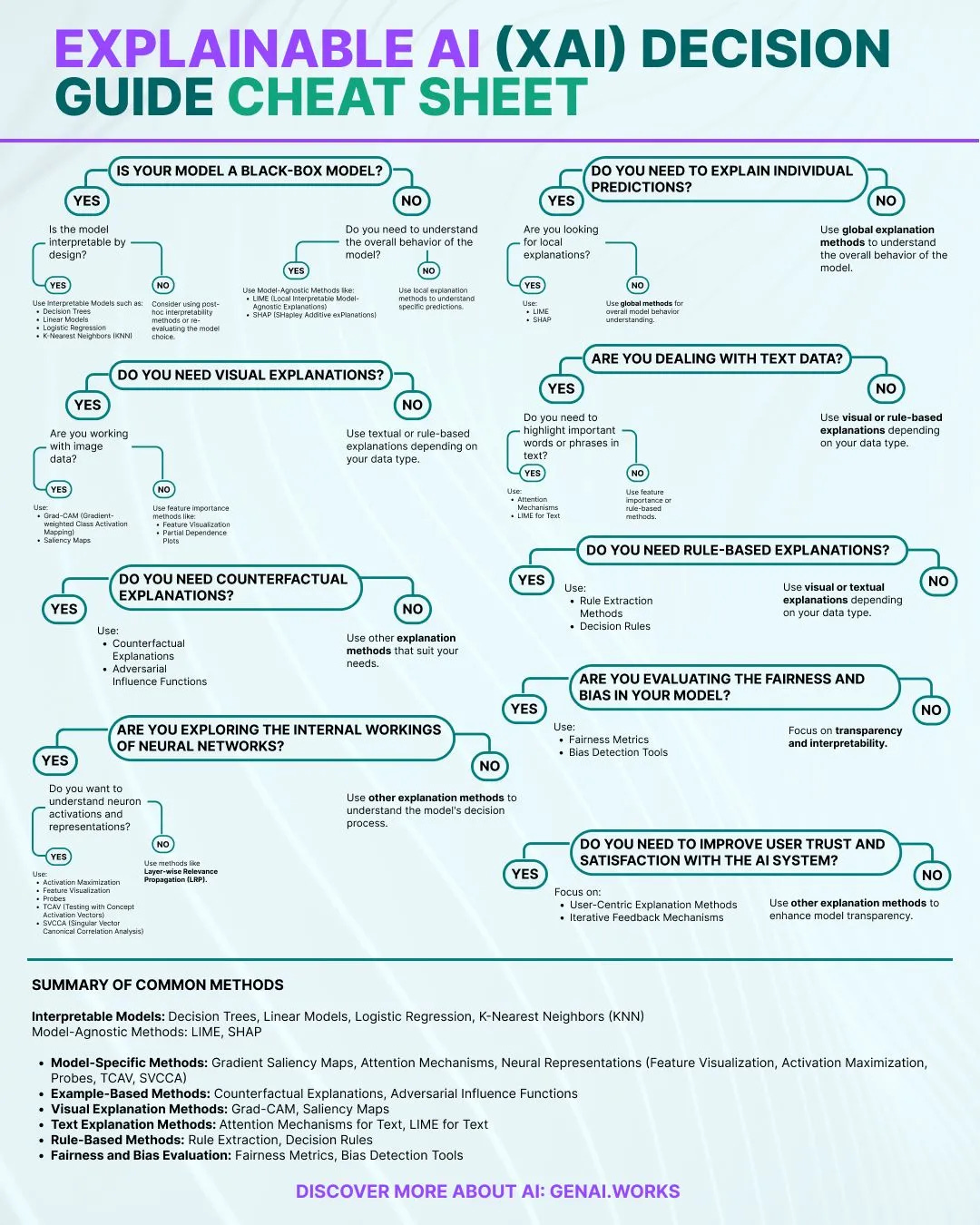

Decision Guide Cheat Sheet

A practical guide for decision-making in XAI:

Programming Libraries

A selection of powerful tools for implementing XAI:

Explainable AI Toolkit (XAITK): A comprehensive suite for analyzing ML models.

SHAP (SHapley Additive exPlanations): A widely used method for interpreting ML predictions.

LIME (Local Interpretable Model-agnostic Explanations): A tool for understanding complex models.

ELI5: A Python library for model visualization and debugging.

InterpretML: An open-source package for interpretable ML models.
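
To give a feel for what a tool like SHAP computes, here is a minimal sketch of exact Shapley values for a single prediction, written in plain Python. It does not use the SHAP library or its API. The `shapley_values` helper, the `toy_model` risk score, its feature names, and the all-zero baseline are hypothetical and chosen only for illustration. Real libraries rely on approximations, because the exact computation below grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (illustrative helper, not a library API).

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2^n in the number of features, so this only suits tiny inputs.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values.
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(features):
    # Hypothetical risk score with one interaction term (assumption for illustration).
    temperature, pressure, speed = features
    return 2.0 * temperature + 1.0 * pressure + 0.5 * temperature * speed


x = [3.0, 4.0, 2.0]          # the instance being explained
baseline = [0.0, 0.0, 0.0]   # assumed reference input
phi = shapley_values(toy_model, x, baseline)

for name, contribution in zip(["temperature", "pressure", "speed"], phi):
    print(f"{name}: {contribution:.2f}")
```

In this toy case the attributions come out to roughly 7.5, 4.0, and 1.5, which add up to the gap between the model's output for `x` and for the baseline. The interaction term between temperature and speed is split evenly between those two features, which is the kind of per-feature accounting an end-user could inspect.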

EVENTS

The 3rd World Conference on eXplainable Artificial Intelligence

📍 Istanbul, Turkey

📅 July 9-11, 2025

An international gathering of experts discussing the latest advancements in XAI.

Bonus Track: The Case for Explainable AI - Forbes

A fun yet insightful take on the importance of explainability in AI. The article draws an analogy from The Princess Bride to highlight how AI systems, like the film's famed swordsman Inigo Montoya, often "have some explaining to do." From loan approvals to customer service, the piece underscores why transparency in AI is more critical than ever.